You have probably seen robots and AI systems taking on tasks across industries such as healthcare, business, and gaming. This is no longer science fiction; it’s reality. These robots, AI-based tools, and of course ChatGPT are a testament to the power of machine learning (ML), deep learning (DL), and artificial intelligence (AI).

No doubt, ML approaches have done wonders in almost every industry. But supervised and unsupervised algorithms need a significant amount of data to train on before they deliver excellent results. Even AI-based tools and machines often can’t perform well without labeled examples to supervise their training.

What if I told you that machines can learn to sit, walk, and talk like us? What if machines could even match humans at complex tasks? Yes, it’s possible!

The breakthrough moment came with AlphaGo by DeepMind. AlphaGo was built to play the board game Go, and in 2016 it beat Lee Sedol, one of the greatest Go players in history. AlphaGo didn’t win just by copying human moves. It innovated, surprising even Lee Sedol. How?

The answer is reinforcement learning.

What Is Reinforcement Learning?

Remember how you learned to ride a bike? You didn’t just sit down with a manual or memorize formulas. Instead, you got on, fell, adjusted, and tried again. Every time you succeeded, balancing for just a moment, you felt a sense of accomplishment, a reward. That’s reinforcement learning (RL) in a nutshell.

Unlike traditional machine learning, which relies heavily on structured datasets, reinforcement learning takes a different approach.

It’s more intuitive, almost human-like: the agent can discover highly effective strategies on its own.

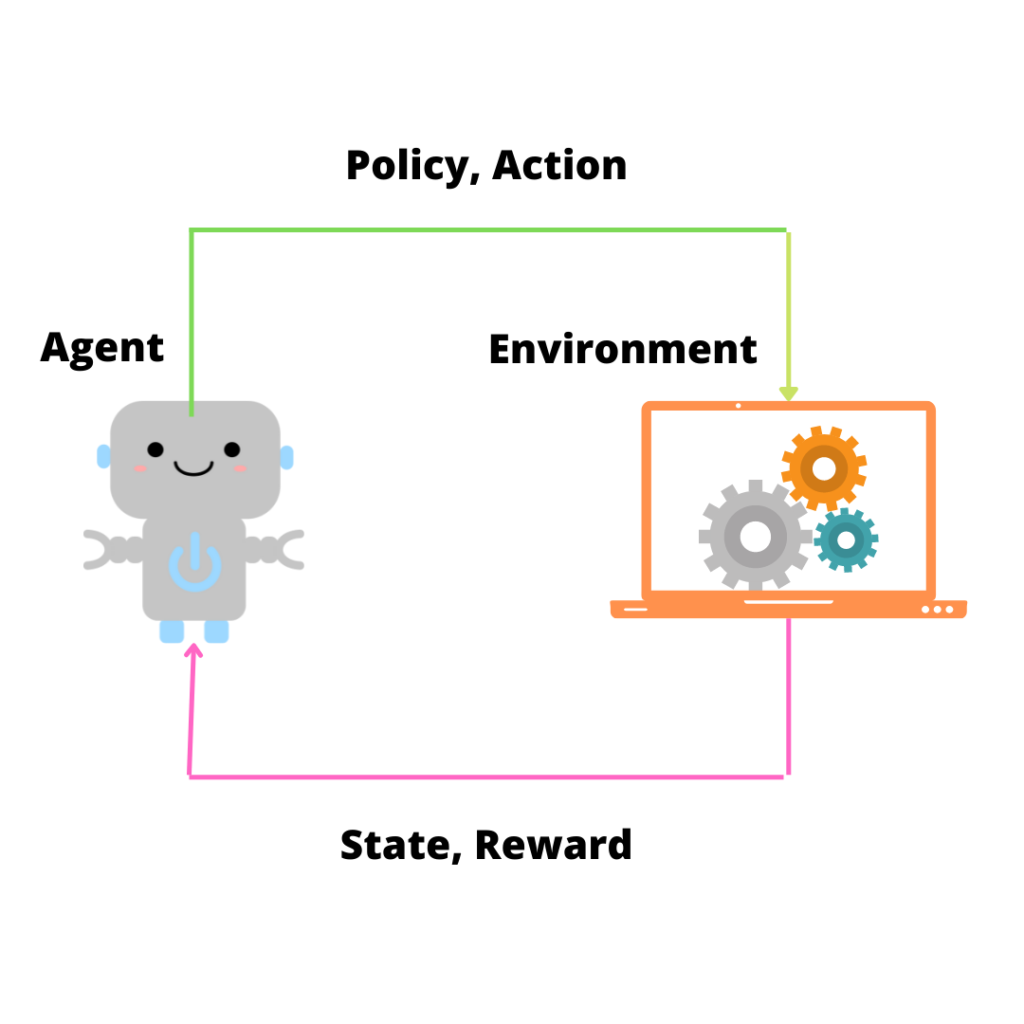

In RL, an agent interacts with an environment, takes actions, and receives feedback in the form of rewards or penalties. Over time, the agent learns which actions yield the best results. It’s this continuous loop of trial, error, and improvement that makes RL so powerful.

Fundamentals of Reinforcement Learning

- Agent: The learner or decision-maker (e.g., a robot, software, or algorithm).

- Environment: The world the agent interacts with (e.g., a simulation, game, or real-world task).

- Action: A decision or move made by the agent.

- State: The current situation or condition of the agent and the environment.

- Reward: A score or feedback given based on the agent’s actions.
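To see how these five pieces fit together, here is a minimal sketch of the agent-environment loop. The `LineWorld` corridor, its reward of 1.0 at the goal, and the `run_episode` helper are hypothetical names invented for illustration; they are not part of any library.

```python
import random


class LineWorld:
    """Hypothetical toy environment: a corridor of cells with the goal at the right end."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        # Put the agent back at the left end and return the initial state.
        self.position = 0
        return self.position

    def step(self, action):
        # action is -1 (left) or +1 (right); the walls clamp the position.
        self.position = max(0, min(self.length - 1, self.position + action))
        done = self.position == self.length - 1
        reward = 1.0 if done else 0.0  # assumed reward: 1.0 only on reaching the goal
        return self.position, reward, done


def random_policy(state):
    # An untrained agent: ignores the state and picks a direction at random.
    return random.choice([-1, 1])


def run_episode(env, policy, max_steps=100):
    """Run one episode and return the total reward the agent collected."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # the agent decides
        state, reward, done = env.step(action)  # the environment responds
        total_reward += reward
        if done:
            break
    return total_reward


print(run_episode(LineWorld(), random_policy))
```

The agent here never learns anything; the point is only the shape of the loop: observe the state, choose an action, receive a reward and a new state, repeat.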

Even Meta is working on RL.

Also, Geoffrey Hinton, often called the godfather of deep learning, said:

“The key to AI, in my view, is to build systems that can learn and adapt on their own, much like humans do.”

Why Reinforcement Learning with Python?

Python has made RL accessible to everyone, from hobbyists to researchers. With libraries like PyTorch, TensorFlow, and OpenAI Gym (now maintained as Gymnasium), you don’t need to start from scratch. These tools provide pre-built environments, neural network frameworks, and everything else you need to succeed with RL projects without the headache of building it all manually.

Now let’s code a reinforcement-learning-based algorithm for a robot that manages a warehouse and discards faulty items.

Warehouse Management with Reinforcement Learning

We can train a robot to manage inventory, autonomously identify faulty items, and remove them. This reduces human intervention and speeds up sorting.

In this section, we’ll break down the components required to set up an RL-based warehouse management system, where the robot’s task is to identify and discard faulty items.

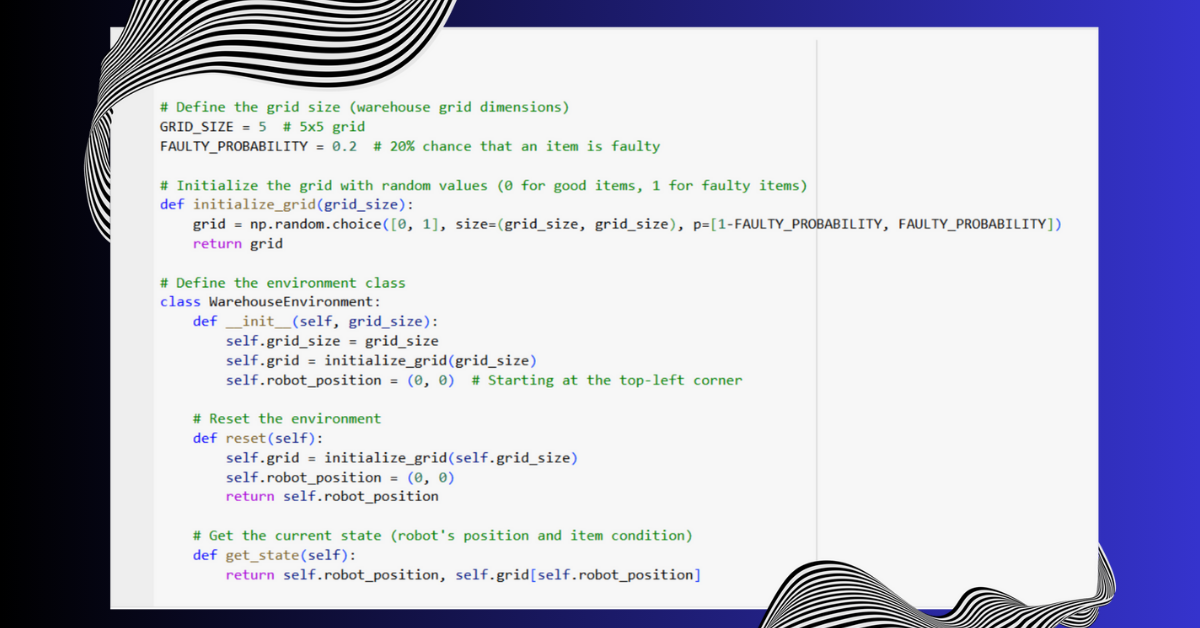

1. Defining the Environment

For warehouse management, the environment can be imagined as a grid representing a storage area, where each cell in the grid contains an item. Some items are defective, while others are in good condition. The goal of the robot is to navigate through the grid, inspect the items, and remove the faulty ones.

- State: The state represents the current condition of the environment. In our case, it is the position of the robot in the warehouse grid and the condition of the item at that position (faulty or good).

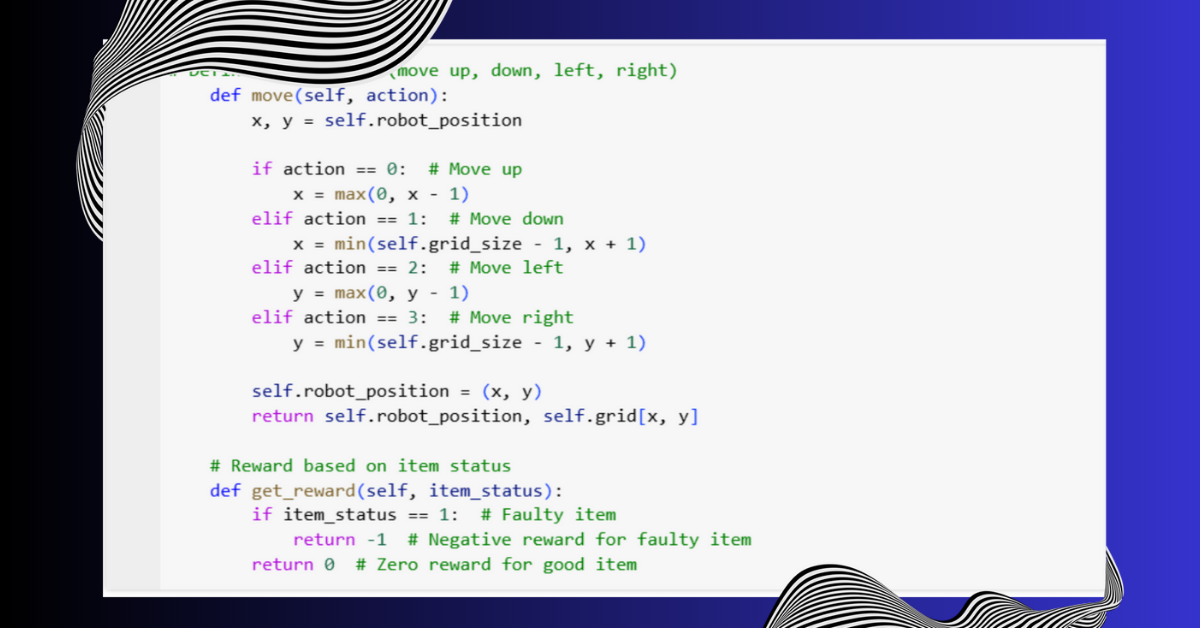

- Actions: The actions are the possible moves the robot can make. The robot can move up, down, left, or right to inspect different items in the warehouse.

- Goal: The robot’s goal is to identify and discard faulty items. This requires navigating through the warehouse and interacting with each item.

In RL, the environment gives feedback to the agent based on its actions.
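The grid described above can be sketched in a few lines of plain Python. The class name `WarehouseGrid`, the 4x4 size, the `faulty_ratio` parameter, and the choice to keep the robot in place when it walks into a wall are all assumptions made for illustration.

```python
import random

# Action names mapped to (row, column) offsets on the grid.
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


class WarehouseGrid:
    """Hypothetical warehouse: every cell holds an item that is good (False) or faulty (True)."""

    def __init__(self, rows=4, cols=4, faulty_ratio=0.25, seed=0):
        rng = random.Random(seed)
        self.rows, self.cols = rows, cols
        # Randomly mark roughly `faulty_ratio` of the items as faulty.
        self.faulty = [
            [rng.random() < faulty_ratio for _ in range(cols)] for _ in range(rows)
        ]
        self.position = (0, 0)  # the robot starts in the top-left corner

    def state(self):
        # State = robot position plus the condition of the item at that position.
        row, col = self.position
        return (row, col, self.faulty[row][col])

    def move(self, action):
        # Apply the offset, clamping so the robot cannot leave the grid.
        d_row, d_col = ACTIONS[action]
        row = min(max(self.position[0] + d_row, 0), self.rows - 1)
        col = min(max(self.position[1] + d_col, 0), self.cols - 1)
        self.position = (row, col)
        return self.state()


warehouse = WarehouseGrid()
print(warehouse.state())
print(warehouse.move("right"))
```

Passing a fixed `seed` keeps the layout of faulty items reproducible between runs, which makes experiments easier to compare.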

2. Defining the Agent

The agent is the robot that interacts with the environment. The agent’s objective is to maximize its long-term reward by learning how to make the best decisions.

- Agent’s Actions: The agent (robot) performs actions in the environment to interact with items. It chooses to move in specific directions (up, down, left, right) to inspect items in the grid.

- Q-table (State-Action Value Table): The robot maintains a Q-table that helps it decide which action to take. The Q-table stores values for each state-action pair, indicating the quality of taking a particular action in a given state.

The goal of the agent is to learn the best policy that maximizes the rewards (i.e., efficiently identifying and discarding faulty items) over time.
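A Q-table can be as simple as a dictionary that maps each state to one value per action. The sketch below assumes states are `(row, column)` tuples and that every value starts at 0.0; `make_q_table` and `best_action` are hypothetical helper names.

```python
from collections import defaultdict

ACTIONS = ["up", "down", "left", "right"]


def make_q_table():
    # Any state seen for the first time gets a value of 0.0 for every action.
    return defaultdict(lambda: {action: 0.0 for action in ACTIONS})


def best_action(q_table, state):
    # The greedy choice: the action with the highest stored value in this state.
    values = q_table[state]
    return max(values, key=values.get)


q_table = make_q_table()
state = (0, 0)                    # robot in the top-left corner
q_table[state]["right"] = 0.5     # pretend the robot learned that "right" pays off here
print(best_action(q_table, state))  # prints: right
```

A dictionary keeps the example dependency-free; for larger grids a 2D array indexed by state and action is a common alternative.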

3. Actions

Actions are what the robot can do in the warehouse. At each step, the robot chooses an action based on its current position (state) and what it has learned so far.

The robot can choose to move in four possible directions:

- Up

- Down

- Left

- Right

In our warehouse example, each action corresponds to the robot moving to an adjacent cell in the grid to inspect a different item.

Action Selection:

The robot’s goal is to maximize rewards by selecting the right actions. However, the robot has to balance between:

- Exploration: Trying new actions to discover better options.

- Exploitation: Using the best-known actions based on past experience to maximize reward.
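One common way to strike this balance is an epsilon-greedy rule: with a small probability `epsilon` the robot explores, otherwise it exploits. The text above does not name a specific strategy, so treat this choice, and the `epsilon_greedy` helper itself, as an assumption.

```python
import random


def epsilon_greedy(action_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, otherwise the best-known one."""
    if rng.random() < epsilon:
        return rng.choice(list(action_values))          # exploration
    return max(action_values, key=action_values.get)    # exploitation


# Hypothetical learned values for one state.
values = {"up": 0.0, "down": 0.2, "left": -1.0, "right": 0.1}

print(epsilon_greedy(values, epsilon=0.0))  # prints: down (pure exploitation)
print(epsilon_greedy(values, epsilon=0.2))  # usually "down", occasionally a random action
```

A frequent refinement is to start with a high `epsilon` and lower it as training progresses, so the robot explores early and exploits later.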

4. Rewards

In reinforcement learning, the reward is a numerical value given by the environment after the agent performs an action. The reward signals how good or bad an action was in achieving the goal.

- If the robot moves to a grid cell and finds a faulty item, it receives a negative reward (e.g., -1). The goal here is for the robot to avoid faulty items.

- If the robot encounters a good item, it gets a zero or positive reward.

The reward system helps the robot understand which actions lead to successful outcomes and which actions should be avoided. Over time, the robot learns to prefer actions that lead to higher rewards (i.e., it learns to avoid faulty items and move toward good ones).
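The reward rule above translates almost directly into code. This sketch uses -1 for a faulty item, as in the example, and assumes 0 for a good item (the text allows zero or positive); `reward_for` is a hypothetical helper name.

```python
FAULTY_REWARD = -1.0  # penalty from the example above
GOOD_REWARD = 0.0     # assumed: the text allows "zero or positive"


def reward_for(item_is_faulty):
    """Return the reward for landing on a cell, given the condition of its item."""
    return FAULTY_REWARD if item_is_faulty else GOOD_REWARD


# Items the robot meets along a hypothetical five-step path (True = faulty).
path = [False, True, False, False, True]
total = sum(reward_for(item) for item in path)
print(total)  # prints: -2.0
```

The total over a path is what the robot tries to maximize, so a route that passes fewer faulty items scores higher.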

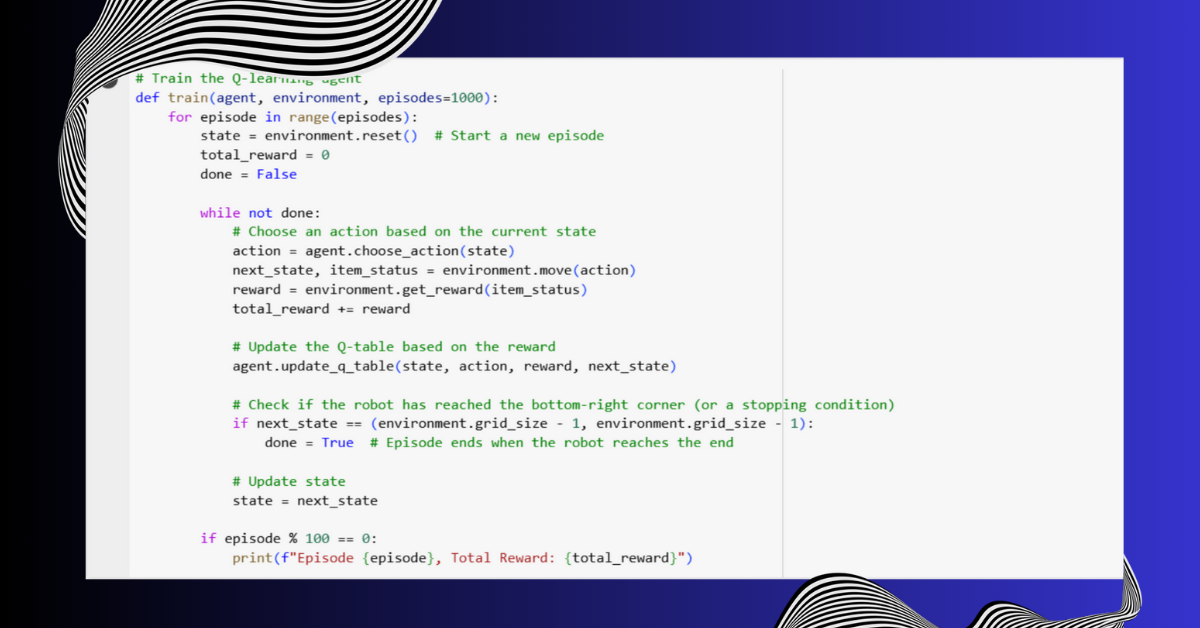

5. Episode Play (Training Process)

An episode in reinforcement learning refers to a complete run of the task, from the initial state to the terminal state. For our robot, an episode would begin when the robot starts at the top-left corner of the warehouse and ends when it has inspected all items or discarded faulty ones.

Key steps in an episode:

- Initialization: The robot begins in the starting state (usually at position (0, 0)).

- Action Selection: At each step, the robot chooses an action based on its current state and its exploration-exploitation policy.

- Environment Feedback: After the robot performs an action, the environment responds with a new state (robot’s new position) and a reward (based on whether the item is good or faulty).

- Q-Table Update: The robot updates its Q-table based on the reward it received. The Q-table helps the robot learn which actions are better in particular states.

- Termination: The episode ends when the robot has either inspected all items or discarded faulty ones. The environment may reset, and the robot will begin another episode to continue learning.

The robot repeats this process for many episodes, gradually improving its decision-making and maximizing rewards over time.
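The episode steps above can be sketched as a small tabular Q-learning loop. Several things here are assumptions rather than details from the text: the 3x3 layout in `FAULTY_CELLS`, the standard Q-learning update rule, the hyperparameters `alpha`, `gamma`, and `epsilon`, the `max_steps` cap, and the use of the robot's position alone as the state.

```python
import random
from collections import defaultdict

ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}
ROWS, COLS = 3, 3
FAULTY_CELLS = {(0, 1), (2, 2)}  # hypothetical layout of faulty items


def step(position, action):
    """Move one cell (clamped at the walls) and return (new_position, reward)."""
    d_row, d_col = ACTIONS[action]
    row = min(max(position[0] + d_row, 0), ROWS - 1)
    col = min(max(position[1] + d_col, 0), COLS - 1)
    new_position = (row, col)
    reward = -1.0 if new_position in FAULTY_CELLS else 0.0
    return new_position, reward


def choose_action(q_table, state, epsilon, rng):
    """Epsilon-greedy selection with random tie-breaking among the best actions."""
    if rng.random() < epsilon:
        return rng.choice(list(ACTIONS))
    best = max(q_table[state].values())
    return rng.choice([a for a, v in q_table[state].items() if v == best])


def train(episodes=300, max_steps=50, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q_table = defaultdict(lambda: {a: 0.0 for a in ACTIONS})
    for _ in range(episodes):
        state = (0, 0)            # Initialization: start in the top-left corner
        inspected = {state}
        for _ in range(max_steps):
            action = choose_action(q_table, state, epsilon, rng)  # Action selection
            next_state, reward = step(state, action)              # Environment feedback
            inspected.add(next_state)
            done = len(inspected) == ROWS * COLS  # Termination: every item inspected
            future = 0.0 if done else gamma * max(q_table[next_state].values())
            # Q-table update: nudge the old value toward reward + discounted future value.
            q_table[state][action] += alpha * (reward + future - q_table[state][action])
            state = next_state
            if done:
                break
    return q_table


q_table = train()
print(q_table[(0, 0)])
```

In this layout, moving right from (0, 0) lands on a faulty item, so after training that action ends up with the lowest value in the starting state and the greedy policy steers away from it.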