Wondering how to build a simple web scraper in Python? Let me tell you how!

How to Build a Simple Web Scraper in Python

Web scraping is the process of extracting data from websites and converting it into a usable format, such as a CSV file. Before you start, make sure that Python is installed on your system.

Now, let’s begin with the procedure.

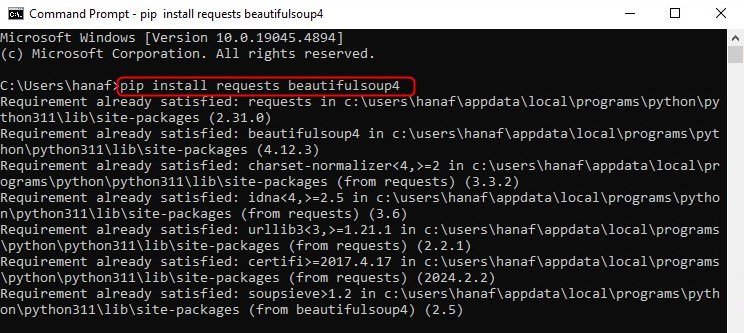

Step 1: Install Required Libraries

First, install the libraries needed for web scraping. For this tutorial, only “requests” and “beautifulsoup4” are required:

pip install requests beautifulsoup4

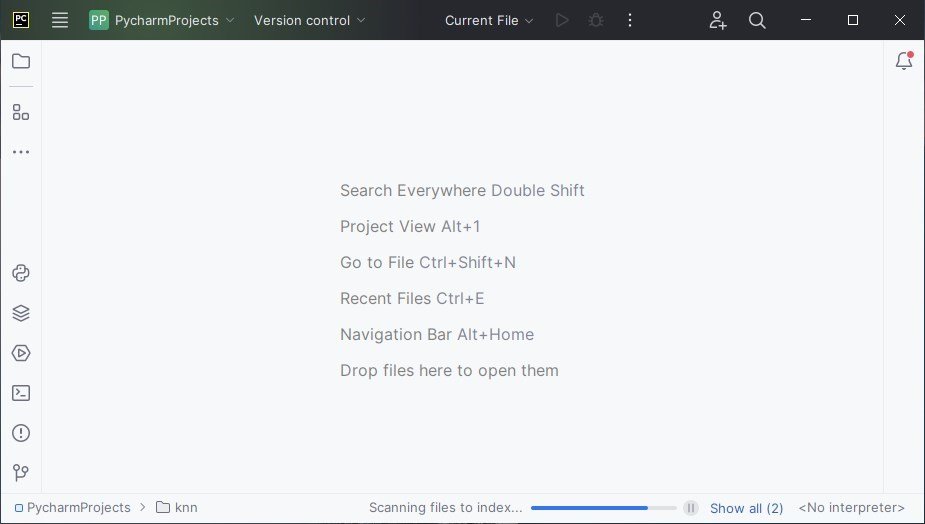
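To confirm that the installation worked, one quick check (a small sketch, not part of the original walkthrough) is to import both packages and print their versions. Note that the beautifulsoup4 package is imported under the name bs4:

```python
# Sanity check: both imports should succeed after the pip install above.
import requests
import bs4  # the beautifulsoup4 package is imported as "bs4"

print("requests version:", requests.__version__)
print("beautifulsoup4 version:", bs4.__version__)
```

If either import raises ModuleNotFoundError, the package was most likely installed for a different Python interpreter than the one running the script.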

Step 2: Create a Python File

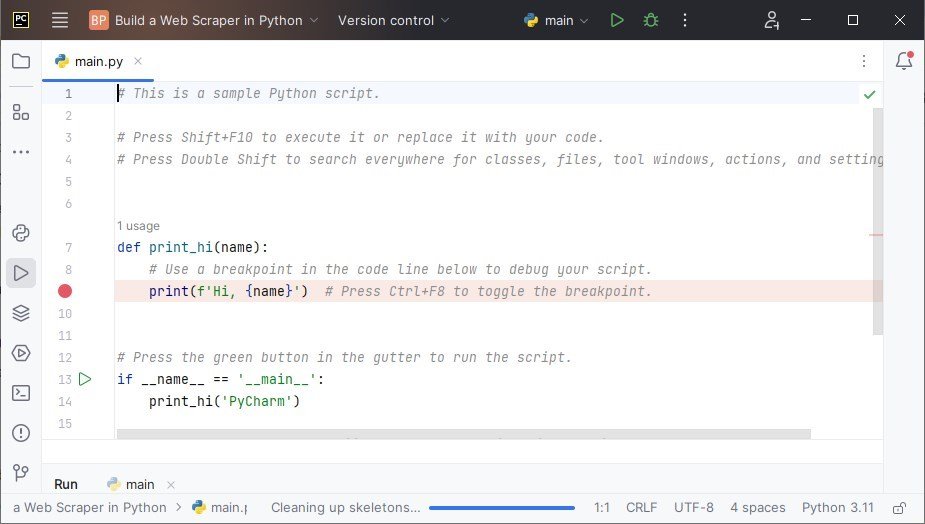

Open your preferred Python IDE to start coding. I am using PyCharm for this demonstration:

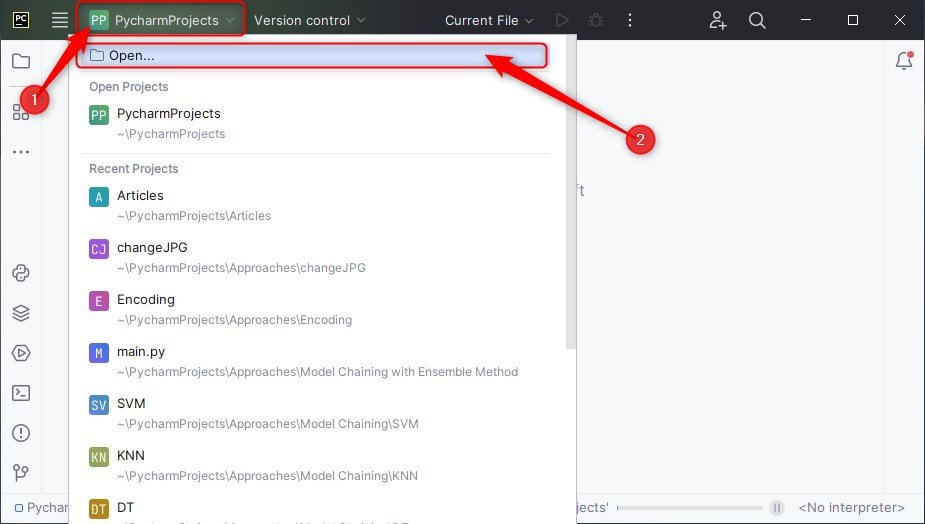

Click “Open” and browse to the location where you want to create the Python project:

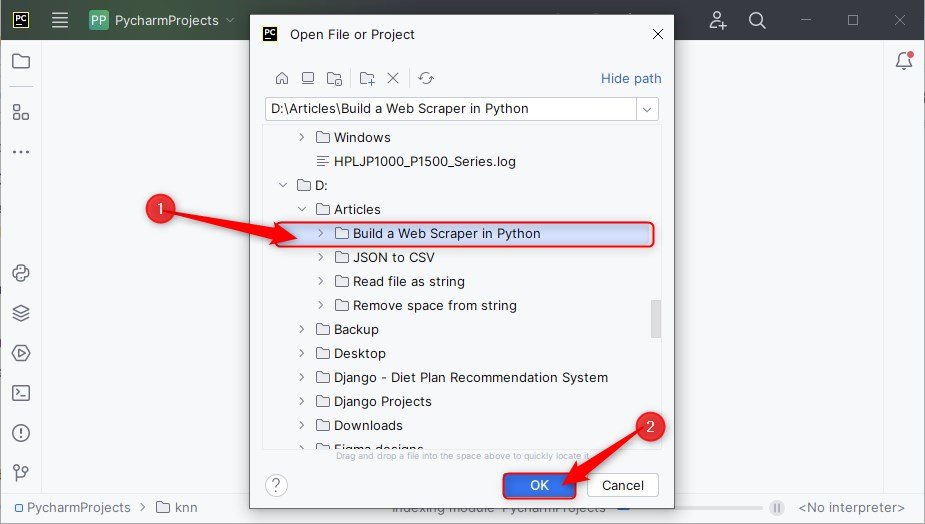

Then, select the folder:

By default, you will get some code in the main.py file. Make sure to clear it:

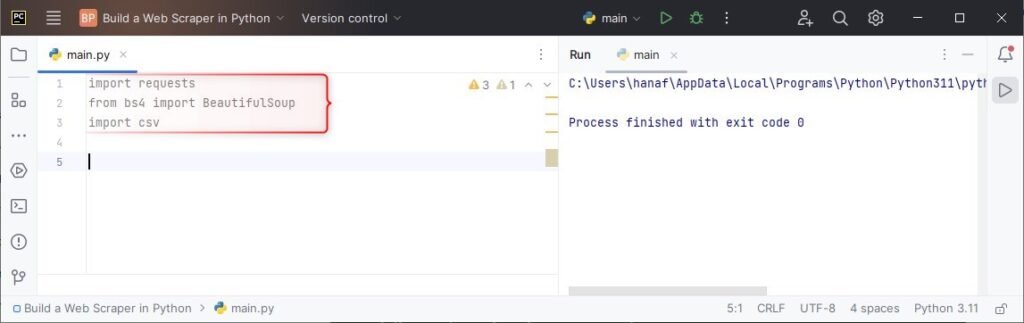

Step 3: Import Libraries

Next, import the necessary libraries:

import requests

from bs4 import BeautifulSoup

import csv

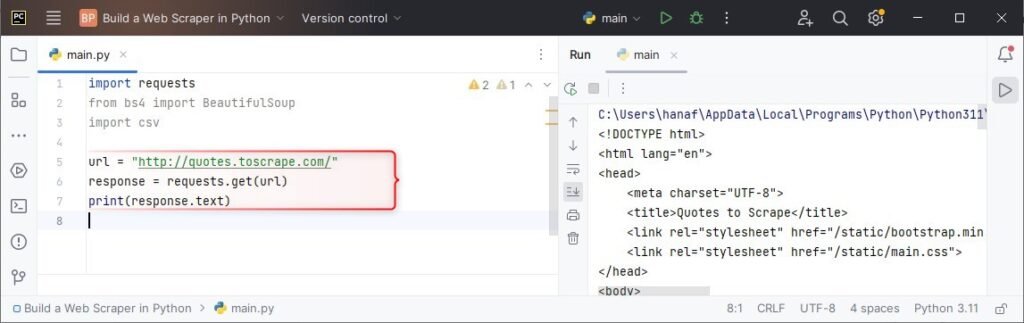

Step 4: Send an HTTP GET Request

After that, define the website URL. Send an HTTP GET request to the URL and save the server response in an object:

url = "http://quotes.toscrape.com/"

response = requests.get(url)

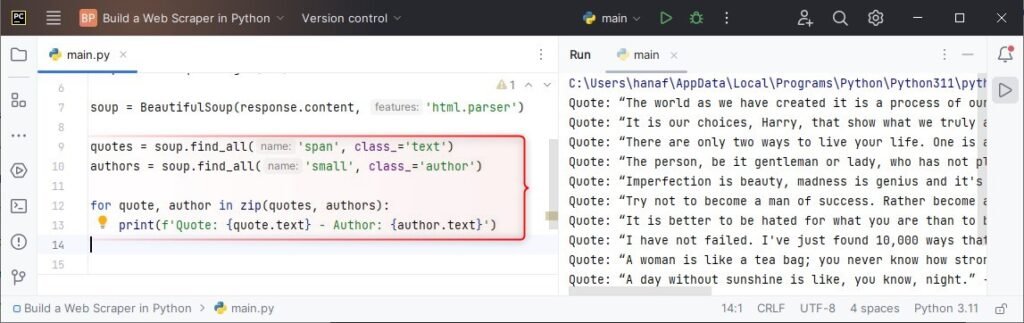
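A plain requests.get() call can hang on a slow server or quietly return an error page. As a hedged sketch of how the request step could be made more defensive, the helper below adds a timeout and a status check. The name fetch_html and the 10-second default are illustrative assumptions, not part of the original program:

```python
import requests


def fetch_html(url, timeout=10):
    """Return the page body as text, or None if the request fails.

    fetch_html and the 10-second default timeout are illustrative choices,
    not part of the original program.
    """
    try:
        response = requests.get(url, timeout=timeout)
        # Raise an HTTPError for 4xx/5xx responses instead of parsing an error page.
        response.raise_for_status()
    except requests.exceptions.RequestException as error:
        # Covers connection errors, timeouts, invalid URLs, and bad status codes.
        print(f"Request failed: {error}")
        return None
    return response.text
```

With this helper, fetch_html("http://quotes.toscrape.com/") would return the page's HTML as a string on success, and the caller only needs to check for None before parsing.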

Step 5: Parse the HTML Content

Using the “response” object, get the website content. Parse the HTML content via the “html.parser” and convert it to a BeautifulSoup object:

soup = BeautifulSoup(response.content, 'html.parser')
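To see what the parsed object offers without touching the network, you can feed BeautifulSoup a small inline HTML string. The markup below is made up for illustration and only borrows the class names targeted in this tutorial:

```python
from bs4 import BeautifulSoup

# Made-up HTML snippet for illustration; not copied from the real site.
sample_html = """
<html><body>
  <span class="text">An example quote.</span>
  <small class="author">Example Author</small>
</body></html>
"""

soup = BeautifulSoup(sample_html, "html.parser")

# find() returns the first matching element (or None if nothing matches).
first_quote = soup.find("span", class_="text")
first_author = soup.find("small", class_="author")

print(first_quote.get_text())
print(first_author.get_text())
```

The keyword is spelled class_ with a trailing underscore because class is a reserved word in Python.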

Step 6: Extract Required Content

Here I am targeting the HTML elements with the classes “text” and “author”. Use the find_all() method to find every element that matches, then print each quote along with its author:

quotes = soup.find_all('span', class_='text')

authors = soup.find_all('small', class_='author')

for quote, author in zip(quotes, authors):

    print(f'Quote: {quote.text} - Author: {author.text}')

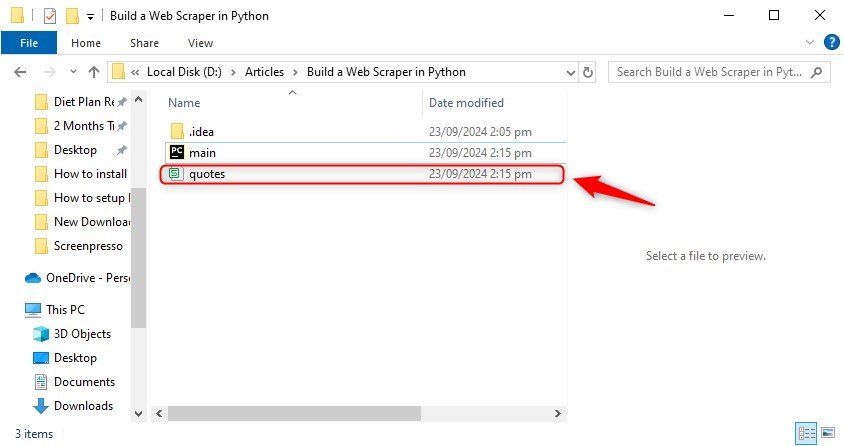

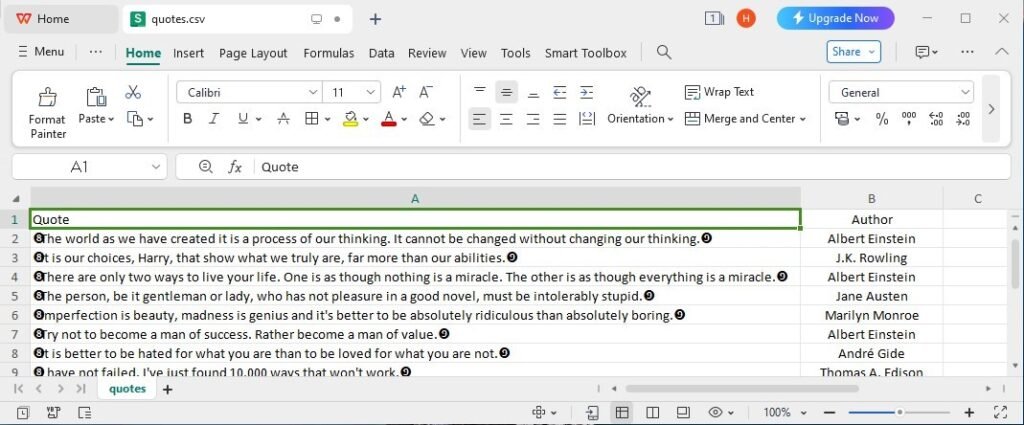
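One caveat with pairing two separate find_all() results through zip(): if any quote were missing its author element, the two lists would fall out of step. A possible alternative, sketched below, is to loop over each quote's container and read both fields from it. This assumes every quote is wrapped in an element with the class “quote”, which is an assumption about the page markup worth confirming in your browser's inspector; extract_pairs is a hypothetical helper name:

```python
from bs4 import BeautifulSoup


def extract_pairs(soup):
    """Return (quote, author) tuples, one per container element.

    Assumes each quote sits inside a <div class="quote"> wrapper; adjust the
    selector if the real page is structured differently.
    """
    pairs = []
    for block in soup.find_all("div", class_="quote"):
        text = block.find("span", class_="text")
        author = block.find("small", class_="author")
        # Skip incomplete blocks rather than mis-pairing quotes and authors.
        if text is None or author is None:
            continue
        pairs.append((text.get_text(strip=True), author.get_text(strip=True)))
    return pairs


# Made-up markup: the second block has no author and is skipped.
sample_html = """
<div class="quote"><span class="text">First.</span><small class="author">A</small></div>
<div class="quote"><span class="text">Orphan.</span></div>
<div class="quote"><span class="text">Third.</span><small class="author">C</small></div>
"""
sample_pairs = extract_pairs(BeautifulSoup(sample_html, "html.parser"))
print(sample_pairs)
```

For a page where every quote has an author, this produces the same pairs as the zip() approach.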

Step 7: Save the Content in a CSV File (Optional)

Lastly, open a CSV file in write mode and save the fetched quotes and author names in it:

with open('quotes.csv', 'w', newline='') as file:

    writer = csv.writer(file)

    writer.writerow(["Quote", "Author"])

    for quote, author in zip(quotes, authors):

        writer.writerow([quote.text, author.text])

print("\nFile Created!!")
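Scraped text often contains non-ASCII characters such as curly quotation marks, so it may be safer to set the file encoding explicitly instead of relying on the platform default. The sketch below shows one way to do that; save_rows is a hypothetical helper, and the sample row and temporary file path are made up for illustration:

```python
import csv
import os
import tempfile


def save_rows(rows, path):
    """Write (quote, author) tuples to a CSV file at path.

    save_rows is a hypothetical helper, not part of the original program.
    """
    # newline="" lets the csv module control line endings;
    # encoding="utf-8" avoids depending on the platform's default encoding.
    with open(path, "w", newline="", encoding="utf-8") as file:
        writer = csv.writer(file)
        writer.writerow(["Quote", "Author"])
        writer.writerows(rows)


# Example with made-up data, written to the system's temporary directory.
example_path = os.path.join(tempfile.gettempdir(), "quotes_example.csv")
save_rows([("“An example quote.”", "Example Author")], example_path)
print(f"Wrote {example_path}")
```

Some spreadsheet applications expect a byte order mark before they detect UTF-8; if the characters look garbled there, encoding="utf-8-sig" is a common workaround.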

Here’s the complete program:

import requests

from bs4 import BeautifulSoup

import csv

url = "http://quotes.toscrape.com/"

response = requests.get(url)

soup = BeautifulSoup(response.content, 'html.parser')

quotes = soup.find_all('span', class_='text')

authors = soup.find_all('small', class_='author')

for quote, author in zip(quotes, authors):

    print(f'Quote: {quote.text} - Author: {author.text}')

with open('quotes.csv', 'w', newline='') as file:

    writer = csv.writer(file)

    writer.writerow(["Quote", "Author"])

    for quote, author in zip(quotes, authors):

        writer.writerow([quote.text, author.text])

print("\nFile Created!!")

Result:

Conclusion

To build a simple web scraper in Python, install the required libraries first. Create a project folder and open it in your preferred Python IDE. Import the libraries and specify the URL of the website you want to scrape.

After that, send an HTTP GET request and parse the HTML content of the response. Lastly, extract the required content and save it in a CSV file for later use.